Introduction

Just yesterday, on May 13, 2024, OpenAI introduced something big: GPT-4o. The launch comes at a time when openai.com receives about 1.6 billion visits each month. GPT-4o is positioned as the next leap in AI, promising to make talking to computers more accessible and fun.

In this easy-to-follow guide, we'll explore what GPT-4o can do, how it works, and how you can use it. Whether you're new to AI or already a fan, let's dive in together and discover the fantastic possibilities of GPT-4o.

Understanding GPT-4o

What is GPT-4o?

- GPT-4o, where the "o" stands for "omni," is OpenAI's latest flagship model, designed to make human-computer interaction much more natural.

- Unlike previous models, GPT-4o is a single multimodal model that can understand and respond to text, audio, and images.

- It offers GPT-4 Turbo-level intelligence on text, reasoning, and coding, with significant improvements in speed, cost, and multimodal capabilities.

Why is GPT-4o Important?

OpenAI announced the model on X (@OpenAI, May 13, 2024):

"Say hello to GPT-4o, our new flagship model which can reason across audio, vision, and text in real time: https://t.co/MYHZB79UqN

Text and image input rolling out today in API and ChatGPT with voice and video in the coming weeks."

- GPT-4o represents a significant advancement in AI technology, offering more natural and versatile interactions.

- It streamlines tasks by allowing users to input information in various formats and receive responses accordingly.

- Integrating text, audio, and image processing into a single model enhances efficiency and user experience.

Comparison with Previous Models

- GPT-4o builds on its predecessors, such as GPT-3.5 and GPT-4 Turbo, by natively combining text, audio, and vision in one model.

- Previously, different modalities were often handled by separate models chained together (ChatGPT's Voice Mode, described below, is one example), whereas GPT-4o handles all modalities in a single, unified model.

- This reduces complexity and improves the overall performance of the system.

Here are other technical parameters comparing GPT-4o with previous models (the speed, price, and rate-limit figures are relative to GPT-4 Turbo):

- 2x faster at generating text

- 50% cheaper, costing $5 per million input tokens and $15 per million output tokens

- 5x higher rate limits, up to 10 million tokens per minute

- Enhanced vision capabilities across most tasks

- Improved non-English language support with a new tokenizer

- 128K-token context window and a knowledge cutoff date of October 2023

- Video understanding, supported by converting video into frames that are passed in as image input
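To make the pricing and context figures concrete, here is a minimal Python sketch that estimates the cost of a single request from its token counts and checks whether a request fits in the context window. It assumes the launch prices listed above ($5 and $15 per million tokens) and treats "128K" as 128,000 tokens; prices change over time, and `estimate_cost` and `fits_context` are illustrative helpers, not part of any OpenAI library.

```python
from decimal import Decimal

# Assumed GPT-4o launch list prices (May 2024), in USD per one million tokens.
# Prices change; check OpenAI's pricing page before relying on these values.
INPUT_PRICE_PER_M = Decimal("5")
OUTPUT_PRICE_PER_M = Decimal("15")
TOKENS_PER_M = Decimal(1_000_000)

# Assumed context window size: 128K tokens shared by the prompt and the output.
CONTEXT_WINDOW_TOKENS = 128_000


def estimate_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of one request from its token counts."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Decimal avoids floating-point rounding noise in small dollar amounts.
    input_cost = Decimal(input_tokens) * INPUT_PRICE_PER_M / TOKENS_PER_M
    output_cost = Decimal(output_tokens) * OUTPUT_PRICE_PER_M / TOKENS_PER_M
    return input_cost + output_cost


def fits_context(prompt_tokens: int, max_output_tokens: int) -> bool:
    """Return True if the prompt plus the requested output fit in the window."""
    return prompt_tokens + max_output_tokens <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    # A hypothetical request: 2,000 prompt tokens and 500 completion tokens.
    print(estimate_cost(2_000, 500))  # prints 0.0175
    print(fits_context(2_000, 500))   # prints True
```

Because output tokens cost three times as much as input tokens here, long generated answers dominate the bill even when prompts are large.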

Multimodal Capabilities Explained

- Text: GPT-4o can generate responses and content based on textual inputs, making it versatile for writing, summarizing, and answering questions.

- Audio: Users can engage in real-time conversations with GPT-4o using voice inputs, enabling hands-free interactions and voice commands.

- Vision: GPT-4o can interpret and analyze images uploaded by users, allowing for tasks such as image recognition and object identification.
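For the text and vision cases, a single request can mix both modalities. The sketch below builds a request body in the shape of OpenAI's Chat Completions API, where one user message carries a list of content parts (`text` and `image_url`). The helper name and the example URL are hypothetical, audio input is not covered, and nothing is sent over the network; check OpenAI's current API reference before relying on the exact field names.

```python
import json


def build_vision_request(question: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions request body that mixes text and an image.

    Hypothetical helper: it only assembles the JSON-serializable body.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # One user turn can carry several content parts of different types.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    body = build_vision_request(
        "What objects are in this photo?",
        "https://example.com/photo.jpg",  # placeholder URL, not a real image
    )
    print(json.dumps(body, indent=2))
```

Keeping the question and the image in the same message is what lets the model reason about them together, rather than describing the image first and answering the question separately.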

Significance of GPT-4o in Various Fields

- Education: GPT-4o can assist students with learning by providing explanations, answering questions, and generating study materials.

- Healthcare: Healthcare professionals can use GPT-4o for tasks like medical imaging analysis, patient communication, and research.

- Business: GPT-4o offers valuable applications in customer service, advanced data analysis, and content creation, improving efficiency and productivity.

By understanding the fundamentals of GPT-4o, users can better leverage its capabilities and integrate it into various aspects of their lives and work.

Evolution of Voice Mode: From Latencies to Seamless Integration with GPT-4o

Previous Voice Mode Latencies

- Before the introduction of GPT-4o, Voice Mode in ChatGPT exhibited significant latencies.

- Users experienced average latencies of 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4.

- These latencies were primarily due to Voice Mode's reliance on a pipeline of three separate models for audio processing.

The Three-Model Pipeline

Voice Mode relied on three distinct models:

- A simple model transcribed audio to text.

- GPT-3.5 or GPT-4 took that text as input and generated a text response.

- Another simple model converted the text response back to audio for the user.

Limitations of the Three-Model Approach

Despite its functionality, the three-model pipeline had limitations:

- Loss of information: The main model, GPT-3.5 or GPT-4, only ever received a transcript, so it lost information along the way. It couldn't directly observe tone, multiple speakers, or background noise, and it couldn't output laughter, singing, or express emotion.

- Lack of integration: Each model operated independently, which prevented seamless integration and limited the system's understanding of context.
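The three-model pipeline and its information loss can be illustrated with a toy Python sketch. Everything here is hypothetical: `SpokenInput`, `transcribe`, `respond`, and `synthesize` are made-up stand-ins, not OpenAI components, and the "models" are trivial functions. The point is structural: the speech-to-text stage keeps only the words, so the middle model never sees tone or background noise, and because the three stages run one after another, their latencies add up.

```python
from dataclasses import dataclass


@dataclass
class SpokenInput:
    """Hypothetical stand-in for a user's spoken turn."""
    words: str
    tone: str              # e.g. "excited" or "sarcastic"
    background_noise: str  # e.g. "dog barking"


def transcribe(audio: SpokenInput) -> str:
    # Stage 1 (speech-to-text): only the words survive; tone and noise are dropped.
    return audio.words


def respond(text: str) -> str:
    # Stage 2 (text-only language model): placeholder for GPT-3.5 or GPT-4.
    return f"You said: {text}"


def synthesize(text: str) -> bytes:
    # Stage 3 (text-to-speech): placeholder that "renders" text as bytes.
    return text.encode("utf-8")


def voice_mode_pipeline(audio: SpokenInput) -> tuple[str, bytes]:
    """Run the three hypothetical stages in sequence, as in the old Voice Mode."""
    transcript = transcribe(audio)
    reply = respond(transcript)
    return reply, synthesize(reply)


if __name__ == "__main__":
    reply, _speech = voice_mode_pipeline(
        SpokenInput("I got the job!", tone="excited", background_noise="cheering")
    )
    print(reply)  # prints You said: I got the job!
```

The reply contains no trace of the excitement or the cheering, because `respond` only ever receives the transcript. An end-to-end model like GPT-4o avoids this hand-off since the same network sees the audio directly.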

GPT-4o: A Unified Solution

- With the development of GPT-4o, OpenAI addressed these limitations by training a single model end-to-end across text, vision, and audio.

- GPT-4o processes all inputs and outputs through the same neural network, ensuring a cohesive and integrated approach.

- This unified model represents a significant step forward in AI technology, enabling more natural and intuitive interactions across modalities.

Future Potential of GPT-4o

- Despite its advancements, GPT-4o is still in the early stages of exploration.

- OpenAI acknowledges that they are only beginning to understand the model's capabilities and limitations.

- As researchers and developers delve deeper, they anticipate uncovering new possibilities and refining the model's performance across various tasks and scenarios.

Capabilities of the GPT-4o Model

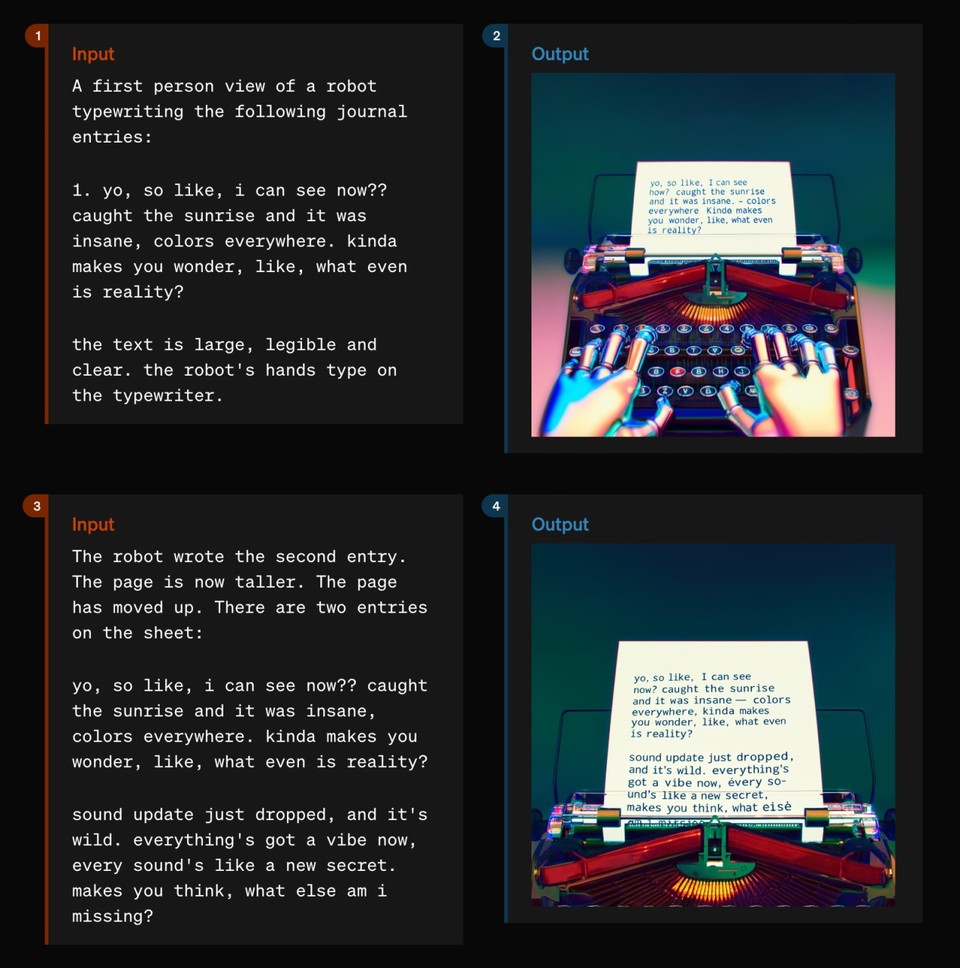

1. Improved Text in Image Generation

GPT-4o shows an improved ability to render text inside generated images compared to previous models. It can produce clearer, more legible text that blends naturally into the overall composition, making text overlays in generated images look more realistic and visually appealing.

Source: OpenAI

2. 3D Object Synthesis

Beyond rendering text in images and creating fonts, GPT-4o also shows improved capabilities in 3D object synthesis. This feature allows the model to generate three-dimensional objects from textual descriptions or prompts provided by users.

Source: OpenAI

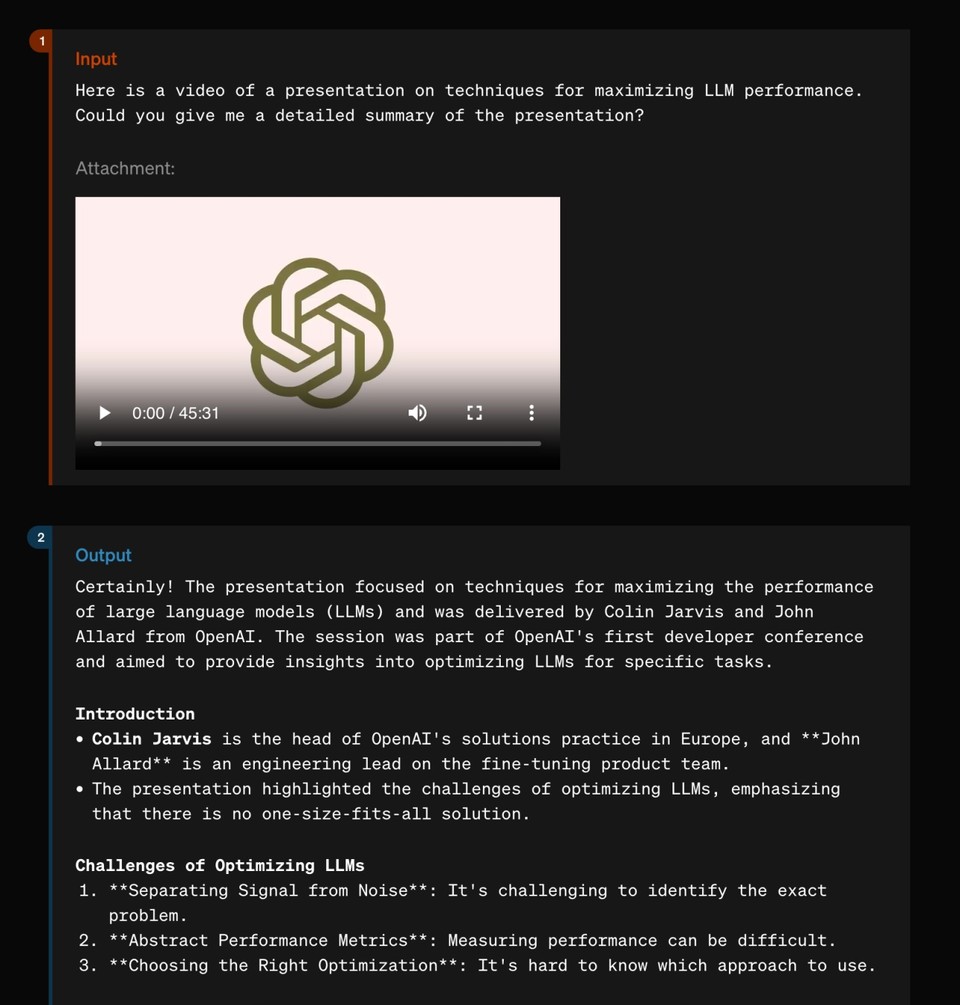

3. Video Lecture Summarization

GPT-4o also introduces significant advancements in video lecture summarization. This feature enables the model to condense lengthy video lectures into concise summaries, making it easier for users to understand the key points without having to watch the entire video.

Source: OpenAI
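The announcement doesn't detail how lecture summarization works internally. One common approach with the API, and the one suggested by "converting to frames" in the comparison list, is to sample a limited number of frames from the video and send them as images, optionally alongside a transcript of the audio. The minimal sketch below assumes that approach and covers only the sampling step; `sample_frame_indices` is a hypothetical helper, and decoding the frames (for example with OpenCV) and transcribing the audio are left out.

```python
def sample_frame_indices(total_frames: int, max_frames: int) -> list[int]:
    """Pick up to max_frames evenly spaced frame indices from a video.

    Hypothetical helper: sending every frame would be slow and costly,
    so only a representative subset is selected.
    """
    if total_frames <= 0 or max_frames <= 0:
        return []
    if max_frames >= total_frames:
        # Short clip: every frame fits within the budget.
        return list(range(total_frames))
    step = total_frames / max_frames
    return [int(i * step) for i in range(max_frames)]


if __name__ == "__main__":
    # A 10-minute lecture at 30 frames per second has 18,000 frames.
    indices = sample_frame_indices(18_000, 20)
    print(indices[:3])  # prints [0, 900, 1800]
```

With these numbers the sampler keeps every 900th frame, which at 30 frames per second is one frame every 30 seconds; a denser budget captures more slide changes at a higher token cost.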

4. Photo to Caricature Generation

Another exciting capability of GPT-4o is its ability to generate caricatures from photos. Users can upload a picture of a person, and the model will create a stylized, exaggerated version of their features in the form of a caricature.

Source: OpenAI

5. Improved Non-English Language Support

GPT-4o has a new tokenizer that provides better support for non-English languages; OpenAI highlighted 20 languages chosen to represent different language families. The new tokenizer needs fewer tokens for many of these languages, helping GPT-4o understand and generate text in a wider range of languages more efficiently.

How to Use GPT-4o

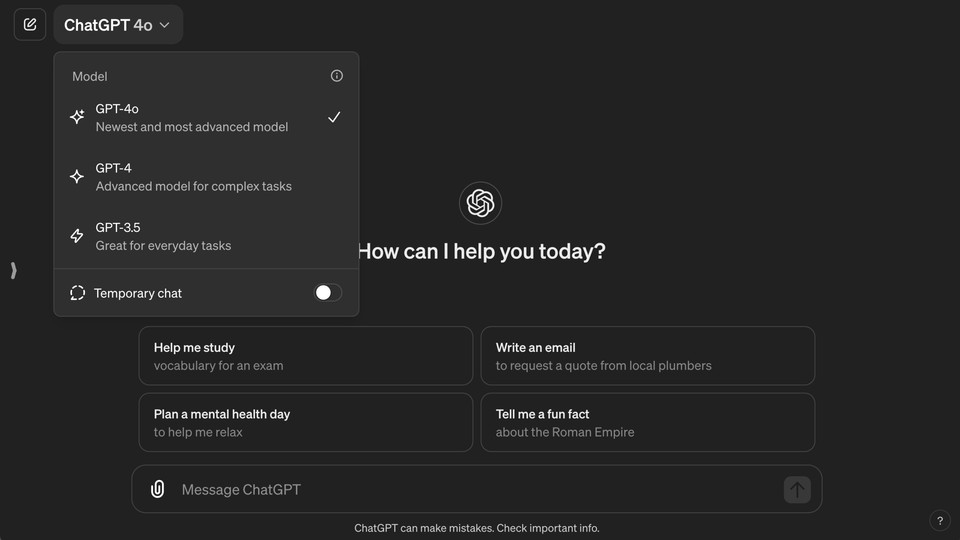

GPT-4o is currently available to paying customers through the OpenAI API. Users of GPT-4 or GPT-4 Turbo should evaluate switching to this more advanced and efficient model.

Additionally, ChatGPT Plus users can now access the GPT-4o model. They can switch the model from GPT-4 to GPT-4o from the top left corner of the screen. OpenAI plans to add the GPT-4o model to the ChatGPT free plan in the coming weeks to make it accessible to everyone.

Developers can also access GPT-4o through the OpenAI developer platform and integrate it into their applications using API keys. OpenAI provides documentation to make these integrations straightforward.
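As a minimal illustration of API access, the Python sketch below prepares a Chat Completions request for GPT-4o using only the standard library. It assumes the public endpoint `https://api.openai.com/v1/chat/completions`, an API key stored in the `OPENAI_API_KEY` environment variable, and a response shaped as `choices[0].message.content`; `build_chat_request` is a hypothetical helper. In practice, OpenAI's official `openai` Python package wraps the same call.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "gpt-4o") -> urllib.request.Request:
    """Prepare (but do not send) a Chat Completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # API key from your OpenAI account
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")
    if key:
        req = build_chat_request("Say hello in three languages.", key)
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)
        print(reply["choices"][0]["message"]["content"])
    else:
        print("Set OPENAI_API_KEY to send a real request.")
```

Keeping request construction separate from sending makes the helper easy to test without a network connection; switching an existing GPT-4 Turbo integration to GPT-4o is then largely a matter of changing the `model` string.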

In addition to GPT-4o, explore Fliki, an AI-based content creation suite ideal for effortlessly transforming text-based content into professional-grade video, audio, and image content. With access to the latest and best models in the industry, Fliki ensures unparalleled quality and innovation in the content creation market.

Conclusion

In conclusion, the introduction of GPT-4o marks a giant leap in the field of AI, particularly in enhancing human-computer interactions. With its multimodal capabilities and streamlined approach, GPT-4o opens up a world of possibilities for users across various domains.

From education and healthcare to business and beyond, GPT-4o offers unprecedented versatility and efficiency. OpenAI has paved the way for more natural and intuitive interactions between humans and AI by seamlessly integrating text, audio, and image processing into a single model.

While GPT-4o represents a leap forward in AI technology, it is essential to recognize that we are only scratching the surface of its potential. As OpenAI continues to explore and refine the model, we can expect to see even more incredible advancements in the years to come.

Whether you're a tech enthusiast, a business owner, or simply curious about the possibilities of AI, GPT-4o offers something for everyone. So, why not discover what this revolutionary technology can do for you? The future of human-computer interaction is here, and it's called GPT-4o.