Introduction

Did you know that the value of the AI industry is expected to skyrocket by more than 13 times in the next seven years? Large language models (LLMs) play a crucial role in the heart of this technological revolution, shaping how machines understand and generate human language.

Gemma is a family of open-language models developed by Google. Gemma is like a powerhouse for understanding and working with human language, and it comes at a time when the demand for advanced language models is higher than ever. In simpler terms, Gemma is here to make AI-powered language processing more accessible and powerful for everyone.

In this guide, we will break down what Gemma is all about. We'll explore its inner workings, demystify the training process, see how well it performs, and, most importantly, learn how to use it effectively. Let's discover how Gemma aligns with the booming growth of the AI industry and how it stands out as an exciting innovation in natural language processing.

What is Google Gema?

Google Gemma is a family of lightweight, state-of-the-art open models developed by Google DeepMind and other teams across Google. These models are built from the same research and technology used to create the Gemini models. Gemma models are designed to assist developers and researchers in building AI applications. They offer state-of-the-art performance at size, supporting various tools and systems, multi-framework tools, cross-device compatibility, and optimization across multiple AI hardware platforms like NVIDIA GPUs and Google Cloud TPUs.

Gemma models are available in two sizes: Gemma 2B and Gemma 7B, each with pre-trained and instruction-tuned variants. Google has also released a Responsible Generative AI Toolkit to provide guidance and essential tools for creating safer AI applications with Gemma. The toolkit includes safety classification methodologies, model debugging tools, and best practices for model builders based on Google's experience in developing large language models.

Furthermore, Gemma models can be fine-tuned on your data to adapt to specific application needs. They are optimized across frameworks, tools, and hardware platforms, allowing for easy deployment on Vertex AI and Google Kubernetes Engine (GKE). Gemma models are available worldwide and have various resources to support developer innovation, collaboration, and responsible use of AI models.

How to Use Google Gemma?

Let's learn how to use Google Gema LLM and unlock Gemma's full potential:

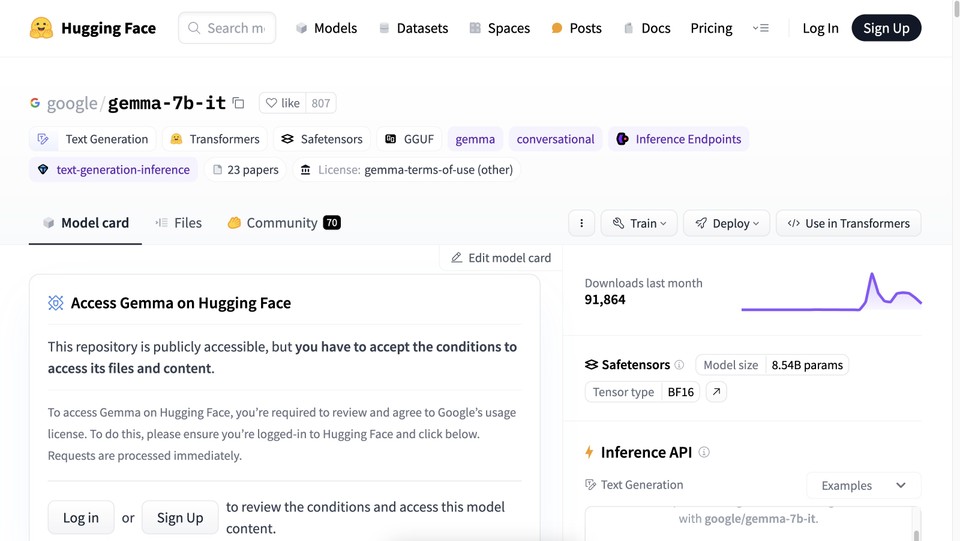

Step 1: Accessing Gemma on HuggingFace

-

Navigate to the Gemma model on HuggingFace platform.

-

Acknowledge the license terms to gain access to Gemma.

Step 2: Installing Necessary Libraries

-

Start by installing the required libraries:

-

Accelerate: Enables distributed and mixed-precision training for efficient model training.

-

Bitsandbytes: Allows quantization of model weights to reduce memory footprint and computation requirements.

-

Transformers: Provides pre-trained language models, tokenizers, and training tools.

-

Huggingface_hub: Facilitates access to the Hugging Face Hub for model sharing and deployment.

-

Step 3: Logging into HuggingFace

-

Use the huggingface-cli login command to log in to HuggingFace.

-

Provide the necessary authentication token obtained from the HuggingFace website.

Step 4: Loading Gemma Model for Inference

-

Import necessary classes for model loading and quantization.

-

Configure model quantization for efficiency.

-

Load the tokenizer for the desired Gemma model.

-

Load the Gemma model itself with the specified quantization configuration.

Step 5: Tokenize Input Text

-

Define the input text or prompt that you want Gemma to process.

-

Tokenize the input text using the loaded tokenizer.

-

Convert the tokenized input to PyTorch tensor for efficient processing.

Step 6: Generating Text Using Gemma

-

Use the model.generate() function to generate text based on the tokenized input.

-

Specify parameters such as maximum output length to control the length of generated text.

Step 7: Decoding the Generated Text

-

Decode the generated text using the tokenizer.decode() function.

-

Convert the token IDs back into human-readable text for interpretation.

By following these easy-to-follow instructions, you can use Google Gemma to perform a range of inference tasks, including text generation and question answering. You can also watch the following video tutorial for visual learning:

Practical Applications of Google Gemma

Unlocking the power of Gemma extends beyond understanding its architecture and performance metrics. This section will explore practical applications where Gemma can be leveraged to tackle real-world challenges effectively.

1. Text Generation

-

Gemma can generate human-like text for various purposes, including content creation, storytelling, and creative writing.

-

It's a valuable tool for marketers, writers, and content creators looking to automate content generation processes.

2. Question Answering

-

Gemma's advanced language understanding capabilities make it a reliable choice for question-answering systems.

-

It can provide relevant answers to various questions, making it useful in educational platforms, customer support systems, and virtual assistants.

3. Language Translation

-

Gemma can be fine-tuned for language translation tasks, enabling seamless communication across different languages.

-

It's a valuable asset for businesses operating in global markets, facilitating multilingual customer support and content localization.

4. Chatbots and Virtual Assistants

-

Gemma can power chatbots and virtual assistants, enabling natural and engaging conversations with users.

-

It can understand user queries, provide relevant responses, and even engage in context-aware conversations.

5. Content Summarization

-

Gemma can summarize large volumes of text, extract key information, and present it concisely.

-

It's useful for summarizing articles, research papers, and documents, saving time and effort for readers and researchers.

6. Sentiment Analysis

-

Gemma's language understanding capabilities make it suitable for sentiment analysis tasks.

-

It can analyze text data to determine the sentiment or emotion expressed, which is valuable for businesses seeking to understand customer feedback and opinions.

7. Code Generation

-

Gemma can generate code snippets based on natural language prompts, assisting developers in writing code more efficiently.

-

It's useful for educational platforms, coding assistance tools, and software development projects.

8. Personalized Content Recommendations

-

Gemma can analyze user preferences and behavior to generate personalized content recommendations.

-

It's beneficial for content platforms, e-commerce websites, and streaming services to enhance user experience and engagement.

By exploring these practical applications, you'll gain insights into how Gemma can be integrated into various domains and workflows to enhance productivity, efficiency, and user experience.

Conclusion

As we conclude this exploration of Gemma, it's evident that this open language model is not just a tool for today but a catalyst for shaping the future of AI. From simplifying complex coding tasks to enhancing user interactions with virtual assistants, Gemma opens up a world of possibilities.

However, with great power comes great responsibility. Before integrating Gemma into production systems, thorough safety testing specific to each use case is imperative. Responsible deployment ensures that Gemma's benefits are harnessed without compromising ethical considerations.

In the dynamic journey of artificial intelligence, Gemma marks a significant milestone. Whether you're a seasoned practitioner or a curious AI enthusiast, the key lies in exploring Gemma's capabilities and integrating them into your projects to unlock new dimensions in language processing.