Introduction

AI has come a long way since its early days. With the recent release of OpenAI o1, artificial intelligence has entered an exciting new chapter. Imagine having an AI capable of complex reasoning, one that not only understands intricate problems but works through them step by step. Sounds like something from the future, right? Well, that future is now. Today, I'm sharing my experience exploring OpenAI o1, an AI model that truly changes the game.

Why OpenAI o1 is a Big Deal

We’ve all heard the buzz around OpenAI's recent advancements, but OpenAI o1 marks a significant leap forward in AI's reasoning abilities. This isn’t just another update; it’s a whole new approach to solving problems—especially the complex ones. Before I dive into my thoughts, let’s break down what makes this model so special.

We're releasing a preview of OpenAI o1—a new series of AI models designed to spend more time thinking before they respond. These models can reason through complex tasks and solve harder problems than previous models in science, coding, and math. https://t.co/peKzzKX1bu

— OpenAI (@OpenAI) September 12, 2024

OpenAI o1 vs GPT-4o: What’s the Difference?

While GPT-4o has already impressed many of us, OpenAI o1 takes things to the next level. It tackles difficult tasks like math and coding with greater accuracy, thanks to a new training method. OpenAI trained OpenAI o1 using reinforcement learning, which rewards the system for getting things right and penalizes it for errors. That training refines its ability to work through a problem step by step, much as you or I would.

For example, when I tested it with a complex math problem, OpenAI o1 didn’t just spit out an answer. It walked me through its reasoning. It was fascinating to see how it "thought" through the problem, much like a human brainstorming ideas. Here’s a video from their official YouTube channel:

My First Interaction with OpenAI o1

I had the chance to test OpenAI o1 myself, and let me tell you, it was a completely different experience compared to previous models like GPT-4o. When I prompted OpenAI o1 with a tricky question about AI reasoning and coding, it took a moment—pausing to “think” before responding. In fact, it even verbalized its steps: “I’m curious about…,” “Let me consider this…,” and eventually, “Here’s what I’ve concluded.” It felt more like a collaborative problem-solving partner.

And sure, while it’s still not perfect (there were moments where it didn’t quite hit the mark), the improvement was palpable. The model’s ability to handle multi-step reasoning, like complex coding challenges, was impressive. Here’s a video of it generating a complete game from scratch:

One thing that caught my attention was its clarity in explaining how it arrived at a solution, something that felt eerily human. If you’ve used AI models before, you know that they can sometimes go off-track, producing what we call “hallucinations”: outputs that sound plausible but are incorrect. OpenAI o1 is designed to reduce these incidents. In my experience, I noticed fewer random or incorrect responses compared to GPT-4o. However, OpenAI admits they haven’t completely eliminated hallucinations just yet.

OpenAI o1 vs GPT-4o: Which Is Better for Generating Video Scripts?

Curious about just how much OpenAI o1 had improved over GPT-4o, I decided to run a little experiment. I crafted a detailed prompt designed to test their creative and reasoning abilities in generating engaging content. The idea was simple: give both models the same task and see who does it better.

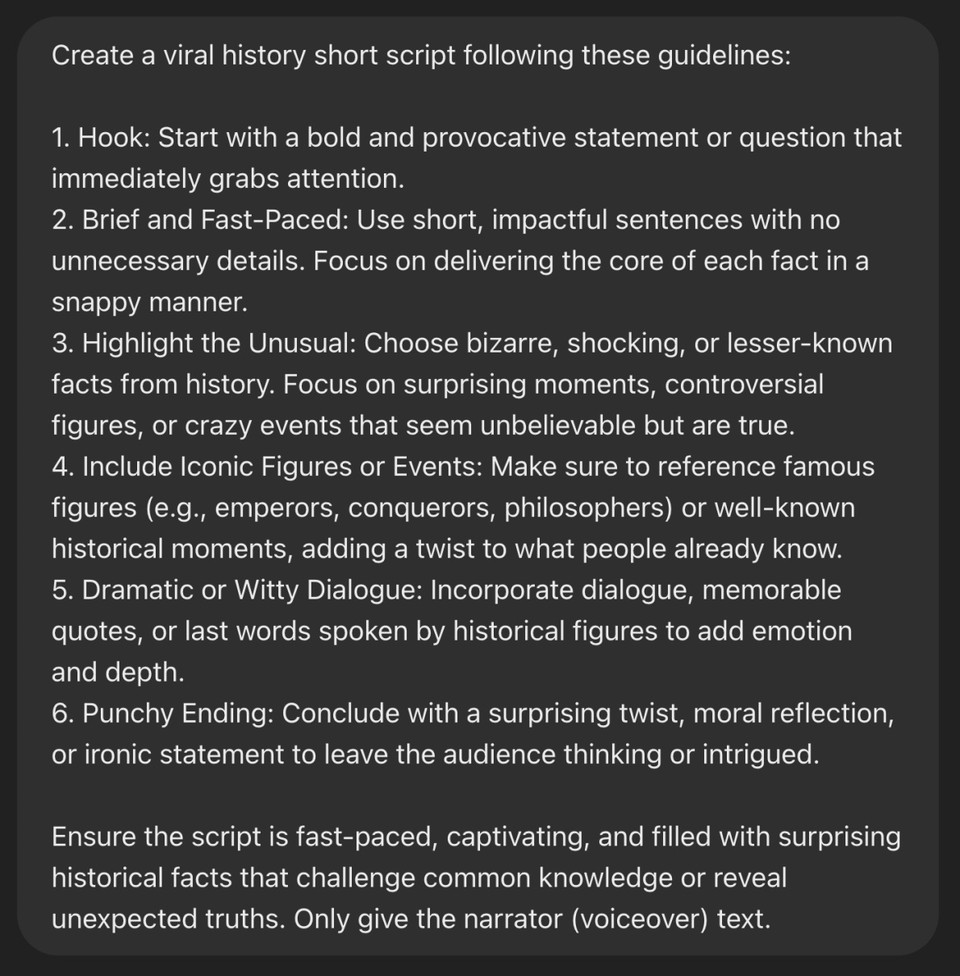

The Prompt

I asked both models to write a script for a viral history short, following specific guidelines:

- Hook: Start with a bold and provocative statement or question that immediately grabs attention.
- Brief and Fast-Paced: Use short, impactful sentences with no unnecessary details.
- Highlight the Unusual: Choose bizarre, shocking, or lesser-known facts from history.
- Include Iconic Figures or Events: Reference famous figures or well-known historical moments with a twist.
- Dramatic or Witty Dialogue: Incorporate dialogue or memorable quotes to add emotion and depth.
- Punchy Ending: Conclude with a surprising twist or ironic statement to leave the audience intrigued.

The Test

I ran this prompt through both GPT-4o and OpenAI o1 to see how each would handle the task.
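If you wanted to repeat this kind of side-by-side test in code rather than in the chat interface, a minimal sketch might look like the one below. It is an illustration under stated assumptions, not the setup used for this post: the prompt wording, the `ask` callable, the `fake_ask` stand-in, and the model identifiers `gpt-4o` and `o1-preview` are all placeholders. In practice you would replace `fake_ask` with a function that calls whichever API client you use.

```python
from typing import Callable, Dict, List

# The six guidelines listed above. The exact prompt wording is an assumption.
GUIDELINES: List[str] = [
    "Hook: Start with a bold and provocative statement or question.",
    "Brief and Fast-Paced: Use short, impactful sentences.",
    "Highlight the Unusual: Choose bizarre, shocking, or lesser-known facts.",
    "Include Iconic Figures or Events: Reference famous figures with a twist.",
    "Dramatic or Witty Dialogue: Incorporate dialogue or memorable quotes.",
    "Punchy Ending: Conclude with a surprising twist or ironic statement.",
]


def build_prompt(guidelines: List[str]) -> str:
    """Assemble one prompt string so every model receives identical input."""
    lines = ["Write a script for a viral history short. Follow these guidelines:"]
    lines += [f"{i}. {g}" for i, g in enumerate(guidelines, start=1)]
    return "\n".join(lines)


def compare_models(
    ask: Callable[[str, str], str], models: List[str], prompt: str
) -> Dict[str, str]:
    """Send the same prompt to each model and collect responses by model name."""
    return {model: ask(model, prompt) for model in models}


def fake_ask(model: str, prompt: str) -> str:
    """Hypothetical stand-in for a real API call; returns a placeholder string."""
    return f"[{model}] script placeholder"


prompt = build_prompt(GUIDELINES)
results = compare_models(fake_ask, ["gpt-4o", "o1-preview"], prompt)
for model, script in results.items():
    print(model, "->", script)
```

Keeping the prompt in one place and passing the model name as a parameter is what makes the comparison fair: the only thing that changes between runs is the model.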

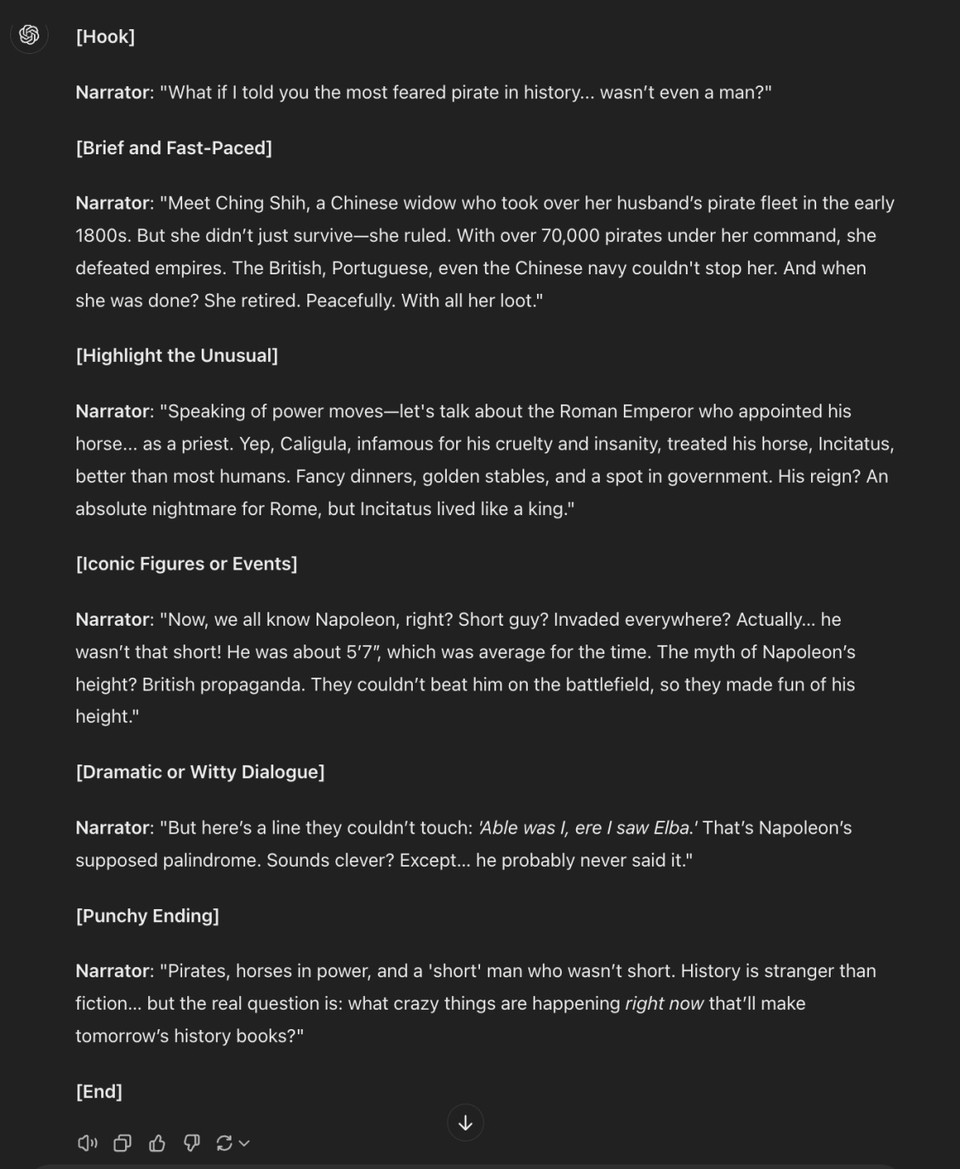

GPT-4o's Responses:

Response 1:

Response 2:

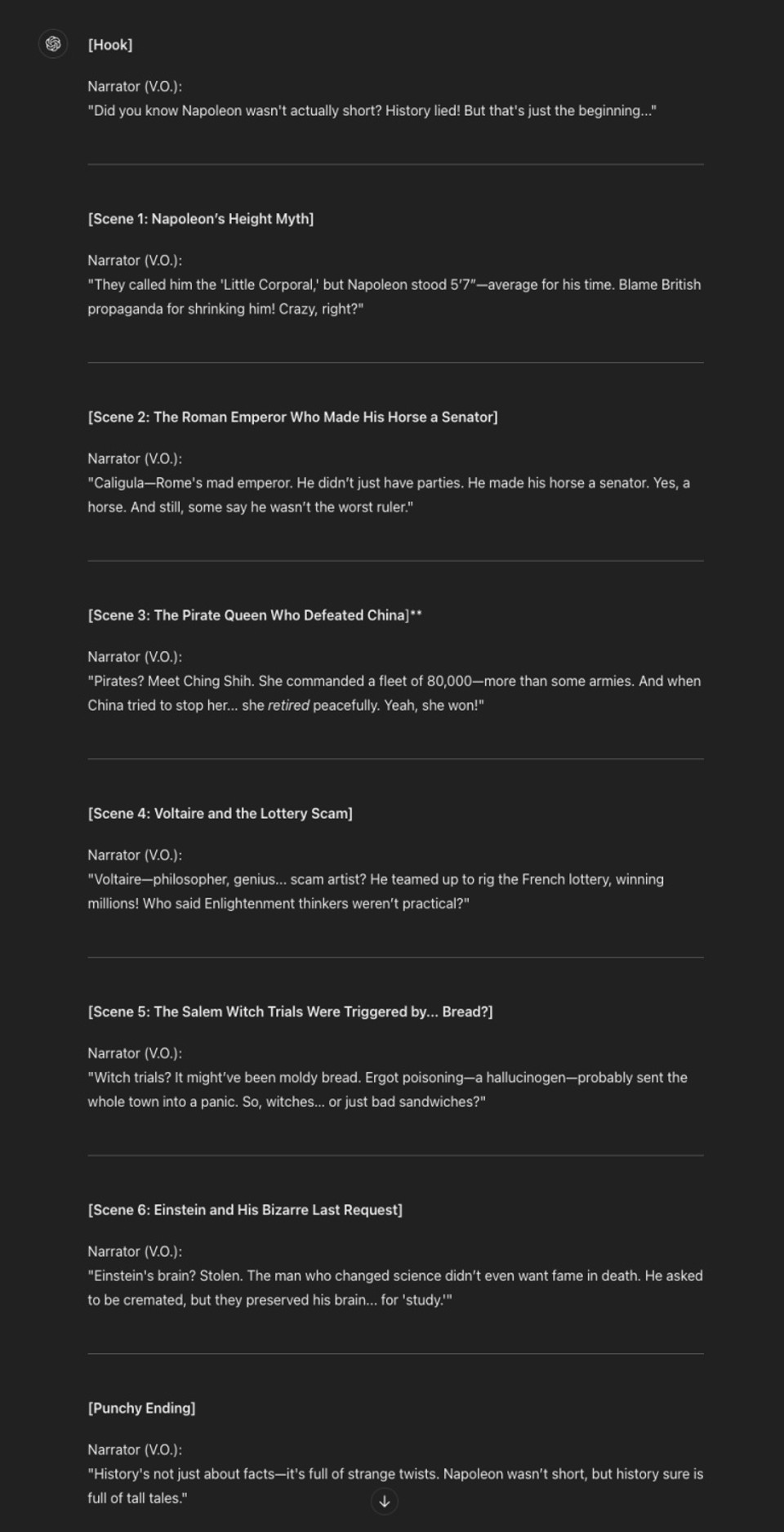

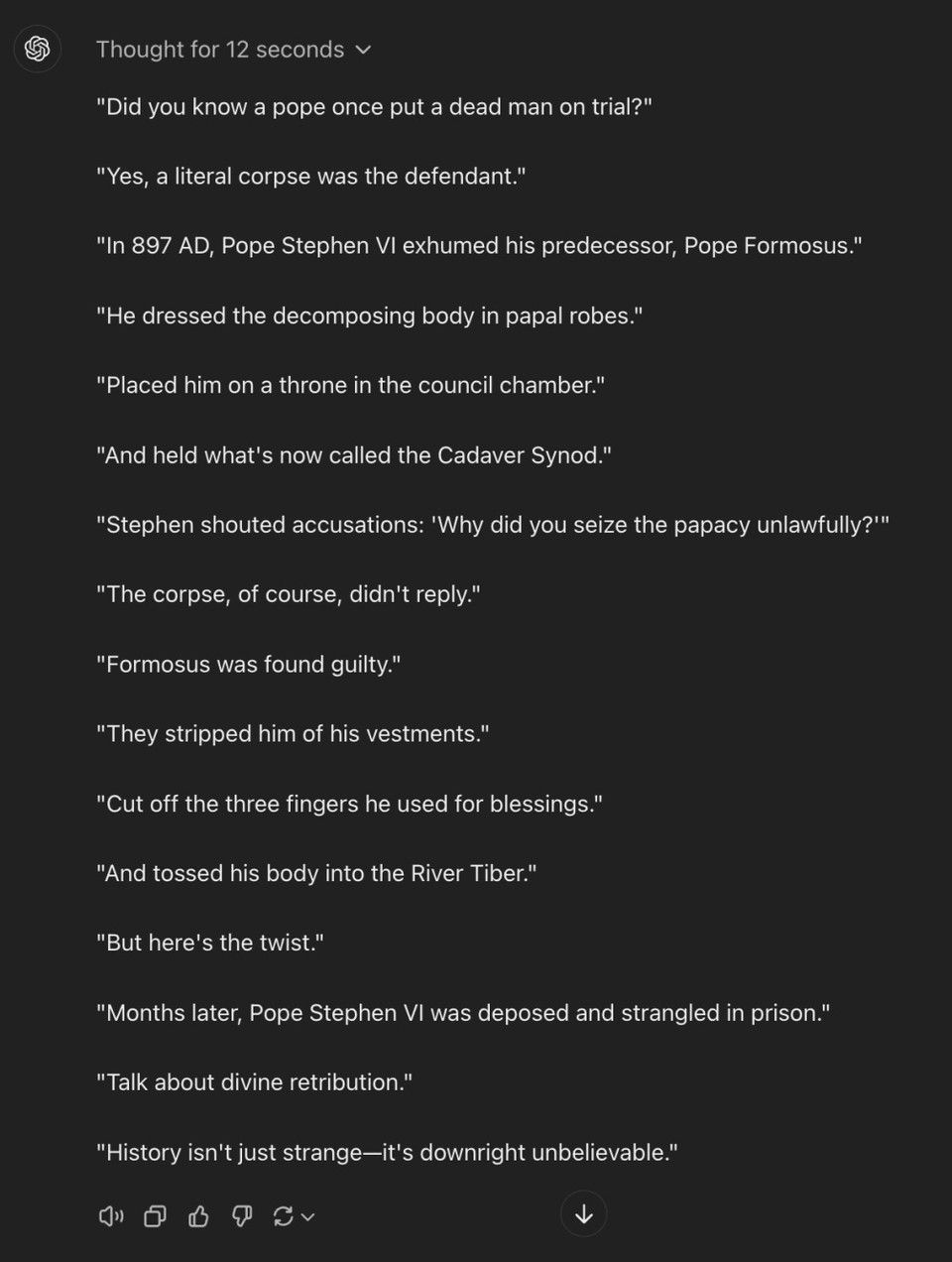

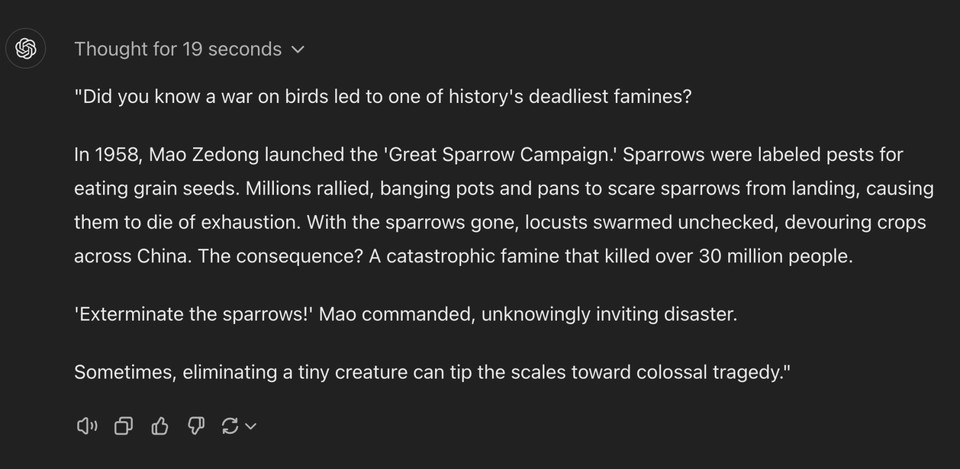

OpenAI o1 Responses:

Response 1:

Response 2:

Analyzing the Results

Both models produced interesting scripts, but there were notable differences.

GPT-4o Strengths

- Variety: GPT-4o covered multiple historical events in each script, giving a broad overview of surprising facts.
- Familiarity with a Twist: It included well-known figures like Napoleon and Einstein but added lesser-known details about them.
- Fast-Paced Style: The scripts were brief and moved quickly from one fact to another, keeping the pace lively.

Areas Where GPT-4o Fell Short

- Depth: Because it covered so many topics, it didn't delve deeply into any single event, which made some facts feel surface-level.
- Surprise Factor: Some of the "surprising" facts, like Napoleon's height myth, are relatively well-known, so they didn't pack as much punch.

OpenAI o1 Strengths

- Deep Dive into the Unusual: OpenAI o1 focused on single, bizarre historical events, providing more detail and creating a more immersive experience.
- Emotional Engagement: By homing in on the macabre trial of a dead pope and the tragic consequences of Mao's campaign, the scripts elicited stronger emotional responses.
- Adherence to Guidelines: OpenAI o1 excelled at highlighting the unusual and included dramatic dialogue that added depth.

Areas Where OpenAI o1 Could Improve

- Limited Variety: Focusing on one event per script meant less diversity in content.
- Pacing: While detailed, the scripts risked losing the "fast-paced" element by spending more time on a single story.

My Takeaway

After comparing the outputs, OpenAI o1 clearly demonstrated its enhanced reasoning and creative capabilities. Its scripts were not just collections of facts but were crafted narratives that drew me in and left a lasting impression. The way OpenAI o1 wove details into a compelling story showcased its ability to think through the prompt more like a human would.

GPT-4o did a commendable job, but it felt more like a rapid-fire list of interesting tidbits. While engaging, it lacked the depth and emotional resonance that OpenAI o1 provided.

How OpenAI o1 Could Change Coding and Problem-Solving

As someone who’s dabbled in coding, I found one of the standout features of OpenAI o1 to be its performance with programming tasks. I threw a complex coding challenge at it—a problem that would normally take me hours to solve. OpenAI o1 not only provided a working solution but explained each step in a way that was easy to follow.

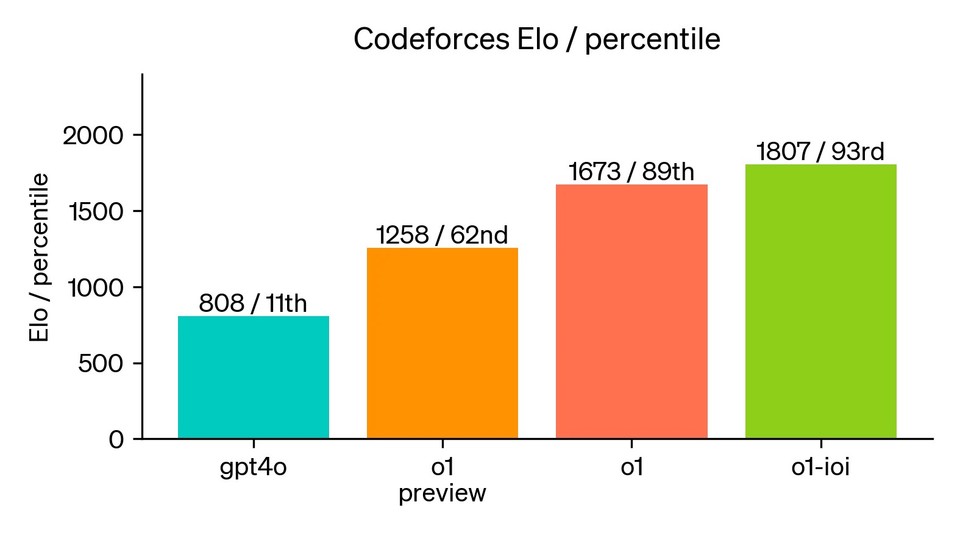

In fact, OpenAI reports that OpenAI o1 ranked in the 89th percentile on competitive programming questions from Codeforces. That’s right: OpenAI o1 is out there, going toe-to-toe with some of the brightest minds in coding, and it’s holding its own!

OpenAI has made it clear that reasoning is the key to future AI advancements, and OpenAI o1 is just the beginning. Imagine an AI that can assist in high-level research, medical diagnosis, or even complex engineering tasks. We’re not quite there yet, but OpenAI o1 feels like the first real step toward that goal.

One thing I’m really excited about is the potential for AI to evolve into agents—autonomous systems capable of not just answering questions but making decisions and taking actions on their own. OpenAI o1 is far from being an “agent,” but its reasoning abilities are a glimpse into what’s possible.

Is OpenAI o1 Worth the Hype?

The release of OpenAI o1 has sparked plenty of excitement, but is it worth the hype? I think so. It’s not just about having another AI model; it’s about pushing the boundaries of what AI can achieve. With its advanced reasoning capabilities and reduced hallucinations, OpenAI o1 is a powerful tool for developers, researchers, and anyone tackling complex problems.

But let’s be real: OpenAI o1 isn’t perfect. It’s more expensive than GPT-4o, and it’s not as fast. For many, the cost might be a barrier, since OpenAI o1 costs $15 per 1 million input tokens and $60 per 1 million output tokens. At launch, that was roughly three times GPT-4o’s input price and four times its output price, which means that for some projects, OpenAI o1 may not be the most practical option.
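To get a feel for what those per-token prices mean for a single request, here is a rough back-of-the-envelope sketch. The two prices are the ones quoted above. The function name `estimate_cost` and the token counts in the example are hypothetical, chosen only to illustrate the arithmetic.

```python
# OpenAI o1 prices quoted in this post, in USD per 1 million tokens.
O1_INPUT_PRICE_PER_M = 15.00
O1_OUTPUT_PRICE_PER_M = 60.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of one request at the prices above."""
    input_cost = input_tokens / 1_000_000 * O1_INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * O1_OUTPUT_PRICE_PER_M
    return input_cost + output_cost


# Hypothetical request: 2,000 input tokens and 5,000 output tokens.
cost = estimate_cost(2_000, 5_000)
print(f"${cost:.2f}")  # prints $0.33
```

Output tokens dominate here: the 5,000 output tokens account for $0.30 of the $0.33 total, so long responses are where the bill grows fastest.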

Final Words

In the grand scheme of things, OpenAI o1 represents a significant leap in AI technology. It’s a step closer to human-like reasoning, solving problems with more precision and thoughtfulness than previous models. While it’s still early days for this new line of AI models, I’m excited to see where OpenAI takes it next.

If you’re someone who relies on AI for coding, problem-solving, or research, OpenAI o1 is a game-changer. Sure, it has its limitations, but the fact that we now have an AI capable of thinking through problems in a human-like way is truly groundbreaking. The next time you’re faced with a complex challenge, you might just want to see what OpenAI o1 can do for you.