Introduction

Stability AI's Stable Diffusion models have generated an estimated 12.59 billion images, about 80% of all AI-generated images. That dominance in image generation sets the stage for Stability AI's next step: generative AI video.

On November 21, 2023, Stability AI announced Stable Video Diffusion, a foundation model for generative video that builds on its image model, Stable Diffusion. In this blog post, we'll look at what Stable Video Diffusion is, which video applications it can be adapted to, how it compares with competing models, and how you can try it yourself.

Read on to see what this state-of-the-art generative video model can do and how it may reshape video synthesis and beyond. Whether you're an enthusiast, a developer, or simply curious about AI, this guide will help you understand and start using Stable Video Diffusion. Let's dive in!

What is Stable Video Diffusion?

In the rapidly evolving landscape of AI, Stability AI introduces Stable Video Diffusion (SVD), the latest stride in generative video models. Released on November 21, 2023, Stable Video Diffusion marks a significant evolution from its predecessor, Stable Diffusion, focusing on the dynamic realm of video synthesis.

At its core, Stable Video Diffusion is a foundation model that generates short video clips from a single input image. Because it was designed to be adaptable, it can be fine-tuned for downstream tasks such as multi-view synthesis from a single image. This adaptability sets it apart and gives it potential applications in sectors such as advertising, education, and entertainment.

Two Image-to-Video Models

Stable Video Diffusion is released as two image-to-video models that generate 14 and 25 frames respectively, at customizable frame rates between 3 and 30 FPS. According to Stability AI, early external evaluations found that these models outperform leading closed models in user preference studies.
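Because each model produces a fixed number of frames, the frame rate you choose decides how long the clip plays: duration is simply frames divided by FPS. The minimal Python sketch below works through that arithmetic for both models. The labels SVD and SVD-XT are the names commonly used for the 14- and 25-frame checkpoints, and the helper name `clip_seconds` is hypothetical.

```python
# Playback length of an SVD clip: frames divided by frames per second.
FRAME_COUNTS = {"SVD": 14, "SVD-XT": 25}  # frames generated per clip
FPS_MIN, FPS_MAX = 3, 30                  # frame-rate range from the announcement


def clip_seconds(num_frames: int, fps: float) -> float:
    """Return how long num_frames frames play at the given frame rate (hypothetical helper)."""
    if not FPS_MIN <= fps <= FPS_MAX:
        raise ValueError(f"fps must be between {FPS_MIN} and {FPS_MAX}, got {fps}")
    return num_frames / fps


if __name__ == "__main__":
    for name, frames in FRAME_COUNTS.items():
        print(f"{name}: {frames} frames -> "
              f"{clip_seconds(frames, FPS_MAX):.2f}s at {FPS_MAX} FPS, "
              f"{clip_seconds(frames, FPS_MIN):.2f}s at {FPS_MIN} FPS")
```

At 3 FPS the 25-frame model plays for about 8.3 seconds, while at 30 FPS the same frames last under a second, which is one reason users pair low frame rates with frame interpolation.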

Exclusively for Research

As of its initial release, Stable Video Diffusion is intended for research purposes only. Stability AI is actively seeking user insights and feedback to improve the model, and it states that the model is not yet intended for real-world or commercial applications. This measured approach lets Stability AI refine the model based on user feedback and safety considerations before a wider release.

Stable Video Diffusion emerges as a testament to Stability AI's dedication to amplifying human intelligence across various modalities. From image and language to audio, 3D, and code models, SVD extends the company's diverse portfolio, contributing to an ever-expanding suite of open-source AI models.

As we navigate through the technical capabilities and potential applications of Stable Video Diffusion, we invite you to join us in understanding this cutting-edge technology that promises to reshape the landscape of generative video models.

How to Use Stable Video Diffusion

Stable Video Diffusion (SVD) turns still images into short, dynamic video clips. In this section, we'll look at two user-friendly ways to try it: the Replicate website and a Google Colab notebook.

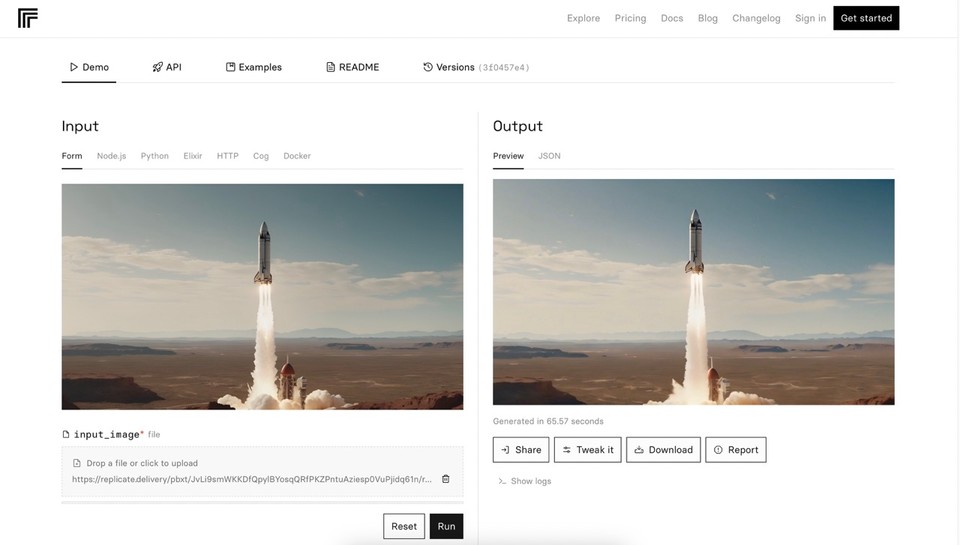

Replicate Website Method

- Open the Replicate link: Stable Video Diffusion on Replicate.
- Upload an input image and adjust the settings, such as the video length.
- Replicate runs the model on Nvidia A40 (Large) GPU hardware and generates a video from your image.

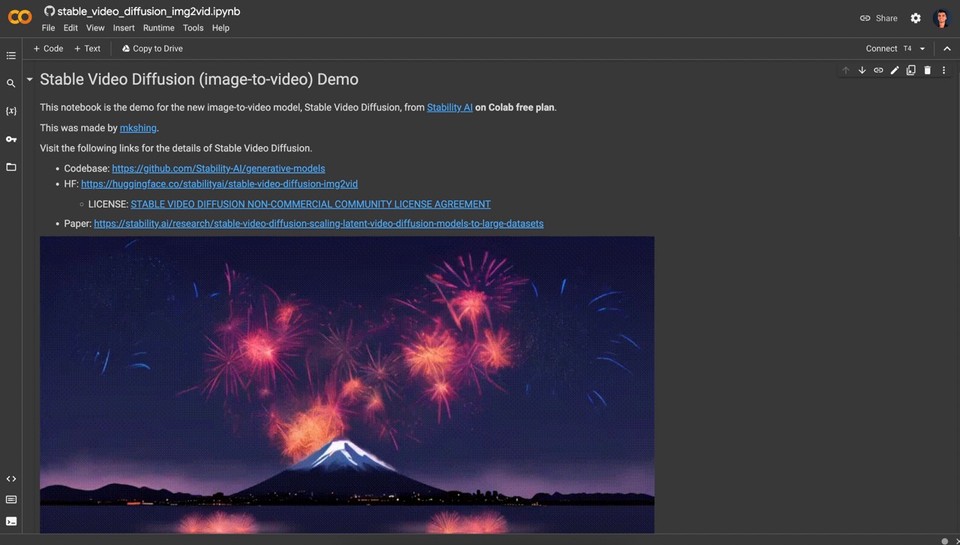

Google Colab Notebook Method

- Open the Colab notebook link: Stable Video Diffusion on Google Colab.
- Follow the step-by-step guide provided in the notebook.
- The notebook includes instructions for installing the necessary libraries, setting up the Move2Move extension, and generating videos from images.

Inside the Google Colab Notebook:

- The notebook demonstrates the Stable Video Diffusion image-to-video model on Colab's free plan.
- Setup instructions cover installing the required packages, including those from Stability AI's GitHub repository.
- A "Colab hack for SVD" section applies configurations specific to running Stable Video Diffusion in Colab.
- Users can download the weights, load the model, and run the generation process within the Colab environment.
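The notebook installs packages from Stability AI's GitHub repository. For readers who prefer a library API, here is a minimal sketch of the same image-to-video step using Hugging Face's diffusers library, which is a different toolchain from the notebook's own code. It assumes a CUDA GPU with `torch`, `diffusers`, `transformers`, and `accelerate` installed (plus the video-export dependency your diffusers version expects, such as OpenCV or imageio), and access to the checkpoints on the Hugging Face Hub, which may require accepting the model license. The function names `checkpoint_for` and `generate` and the file paths are hypothetical.

```python
# Minimal image-to-video sketch using Hugging Face diffusers (NOT the Colab
# notebook's own code). Heavy imports live inside generate() so the small
# helper below works without a GPU stack installed.

# Hub repository IDs for the two released checkpoints.
CHECKPOINTS = {
    14: "stabilityai/stable-video-diffusion-img2vid",     # SVD
    25: "stabilityai/stable-video-diffusion-img2vid-xt",  # SVD-XT
}


def checkpoint_for(num_frames: int) -> str:
    """Pick the checkpoint trained for the requested clip length (hypothetical helper)."""
    try:
        return CHECKPOINTS[num_frames]
    except KeyError:
        raise ValueError(f"SVD ships 14- and 25-frame models, not {num_frames}") from None


def generate(image_path: str, out_path: str, num_frames: int = 25,
             fps: int = 7, seed: int = 42) -> None:
    """Generate a clip from one image and write it to out_path (hypothetical helper)."""
    import torch
    from diffusers import StableVideoDiffusionPipeline
    from diffusers.utils import export_to_video, load_image

    pipe = StableVideoDiffusionPipeline.from_pretrained(
        checkpoint_for(num_frames), torch_dtype=torch.float16, variant="fp16"
    )
    # Move idle sub-models to the CPU so the pipeline fits on smaller GPUs.
    pipe.enable_model_cpu_offload()

    # SVD generates 1024x576 frames, so resize the conditioning image to match.
    image = load_image(image_path).resize((1024, 576))
    generator = torch.manual_seed(seed)
    # decode_chunk_size = frames decoded at once; smaller values use less
    # VRAM at some cost to temporal consistency.
    frames = pipe(image, num_frames=num_frames, fps=fps,
                  decode_chunk_size=8, generator=generator).frames[0]
    export_to_video(frames, out_path, fps=fps)
```

Calling `generate("input.png", "generated.mp4")` would write a 25-frame clip; pass `num_frames=14` to use the smaller checkpoint. Lowering `decode_chunk_size` is the usual first knob to turn if the GPU runs out of memory.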

Whether you use Replicate or Google Colab, these methods make it easy to start experimenting with generative AI video. Remember that this technology is still at the research stage, and your feedback can help shape its future applications.

User Experiences with Stable Video Diffusion

Exploration and Testing

Insight: One user installed Stable Video Diffusion (SVD) for testing and found it generally stable, though it occasionally produced wildly unexpected results.

Observations: Ran tests with and without Topaz Video AI post-processing (interpolation to 60 fps and 2x upscaling), with noticeably varied results.

Key Takeaway: SVD balances mostly stable output with occasional creative divergence.

🌟 STABLE VIDEO DIFFUSION I installed SVD and I did a few tests to see where we are with the tech. It's a good start, it behaves pretty stable most of the time, but it also goes super wild sometimes. Below are four examples with and without Topaz Video AI (interpolation at… pic.twitter.com/sRpIYvJ2X0

— Alex Patrascu (@maxescu) November 23, 2023

Leap in Video Quality

Insight: Another user expresses awe at the remarkable quality of AI-generated video using Stable Video Diffusion.

Observations: Uses SVD together with Topaz Labs' software to interpolate from 6 fps to 24 fps, and compares the result to cinema quality.

Key Takeaway: With interpolation, SVD output can reach what this user describes as cinema quality.

AI generated video just got SCARY good! This uses Stable Video Diffusion, which released 2 days ago and Topaz Labs to interpolate 6fps to 24fps. Cinema quality. pic.twitter.com/TZbOoo35Gh

— Deedy (@debarghya_das) November 24, 2023
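Interpolating from 6 fps to 24 fps means synthesizing extra frames between the ones SVD produced. A simple way to reason about it is sketched below, assuming the interpolator keeps every original frame and fills each gap with evenly spaced new ones (Topaz may handle clip boundaries differently). `interpolation_plan` is a hypothetical helper, and it only covers whole-number multiples such as 6 to 24.

```python
from fractions import Fraction


def interpolation_plan(num_frames: int, src_fps: int, dst_fps: int) -> dict:
    """Describe a retime from src_fps to dst_fps that keeps the clip's time span.

    Hypothetical helper: assumes every original frame is kept and each gap
    between neighbouring frames receives (factor - 1) synthesized frames.
    """
    if num_frames < 2:
        raise ValueError("need at least two frames to interpolate between")
    factor = Fraction(dst_fps, src_fps)
    if factor.denominator != 1 or factor < 1:
        raise ValueError("this sketch only handles whole-number speed-ups")
    k = factor.numerator
    return {
        "factor": k,
        "inserted_per_gap": k - 1,
        "output_frames": (num_frames - 1) * k + 1,
        # Time from first to last frame; identical before and after retiming.
        "span_seconds": (num_frames - 1) / src_fps,
    }


if __name__ == "__main__":
    print(interpolation_plan(25, 6, 24))
```

For example, a 25-frame clip retimed 4x keeps its 25 originals and gains 3 frames per gap, giving 97 frames that span the same 4 seconds at 24 fps.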

Empowering Creativity on Google Colab

Insight: A user shares a Google Colab notebook for SVD, highlighting fast video generation while noting that the model, not the user, decides how the scene moves.

Observations: Generates 3 seconds of video in about 30 seconds using an A100 GPU on Colab+.

Key Takeaway: SVD is easy to run on Google Colab, though for now it makes its own creative decisions, with no direct user control over the result.

SDV (Stable Diffusion Image To Video) Google Colab available here for anyone who wants to play along at home. https://t.co/rnFQ9c4IcS Generates 3 seconds of video in about 30 seconds using an A100 GPU on Colab+ No control of the actual video in any way at all (yet), but it… pic.twitter.com/SRUqPYwOtf

— Steve Mills (@SteveMills) November 24, 2023
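That reported speed allows a quick back-of-the-envelope estimate of how long longer footage would take. The sketch below assumes runs of the same length are executed back to back at the reported rate (3 seconds of video per roughly 30 seconds of A100 time) and ignores one-time costs such as downloading weights; `batch_time` is a hypothetical helper.

```python
import math

# Figures reported in the tweet above (A100 GPU on Colab+); treat them as rough.
CLIP_SECONDS = 3.0        # seconds of video produced per run
RUN_WALL_SECONDS = 30.0   # wall-clock seconds per run


def batch_time(target_video_s: float,
               clip_s: float = CLIP_SECONDS,
               run_s: float = RUN_WALL_SECONDS) -> tuple[int, float]:
    """Return (runs needed, total wall-clock seconds) for target_video_s of footage.

    Assumes back-to-back runs at the reported speed and ignores one-time
    costs such as downloading weights and loading the model.
    """
    runs = math.ceil(target_video_s / clip_s)
    return runs, runs * run_s


if __name__ == "__main__":
    runs, secs = batch_time(60)
    print(f"60 s of video: {runs} runs, about {secs / 60:.0f} min of GPU time")
```

At that rate, one minute of footage takes 20 runs and about 10 minutes of GPU time.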

Replicate Exploration and Cost Considerations

Insight: Another user utilizes SVD on Replicate, detailing the settings and highlighting cost considerations.

Observations: Runs SVD on Replicate with specific settings, including the 25_frames_with_svd_XT option for video length. Notes that Replicate asks for credit card information after a few free runs and reports a cost of about $0.07 per video.

Key Takeaway: Practical insights into using SVD on Replicate, including customization options and associated costs.

Stable Video Diffusion - Image to Video I used Stable Video Diffusion (SVD) on Replicate. Thanks to @fofrAI Replicate link, Midjourney Images and prompts inside. Few things.. 1) I just used the images + default settings (except for video_length for which I chose… pic.twitter.com/ZkcbgORDMN

— Anu Aakash (@anukaakash) November 24, 2023
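The reported price makes it easy to budget a batch of experiments. Replicate generally bills by hardware time, so the real cost varies with settings; the sketch below treats the tweet's roughly $0.07 per video as a flat rate. The number of free runs is a hypothetical parameter because the source does not give an exact figure, and `replicate_cost` is a hypothetical helper.

```python
from decimal import Decimal, ROUND_HALF_UP

# Approximate per-video price reported in the tweet above (not an official rate).
PRICE_PER_VIDEO = Decimal("0.07")


def replicate_cost(videos: int, free_runs: int = 0,
                   price: Decimal = PRICE_PER_VIDEO) -> Decimal:
    """Estimate USD spend for a batch of videos.

    free_runs is hypothetical: the number of initial runs allowed before
    Replicate asks for a card is not stated in the source.
    """
    billable = max(videos - free_runs, 0)
    # Decimal avoids binary floating-point rounding in money arithmetic.
    return (billable * price).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    for n in (10, 50, 100):
        print(f"{n} videos: about ${replicate_cost(n)}")
```

For example, 100 videos would come to about $7.00 at that rate.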

These shared experiences offer a multifaceted view of Stable Video Diffusion, illustrating its stability, creative potential, and the varying approaches users take to explore its capabilities.

Conclusion

Wrapping up our look at Stable Video Diffusion, it's clear this tech isn't just about turning pictures into videos – it's about sparking creativity in a whole new way. The Twitter users' stories give us a sneak peek into what Stable Video Diffusion is all about.

From testing its stability to marveling at the quality of its videos, each user's experience adds a different angle to the story of Stable Video Diffusion. For at least one user, it felt like a big step toward video that looks as good as what you'd see in the movies.

Whether you try it on Google Colab or Replicate, Stable Video Diffusion makes this kind of creativity accessible. The way it makes its own creative choices, as seen in user experiments, hints at what it might eventually do. However, it is currently a research preview: Stability AI wants to hear from users to make it better and safer before it goes mainstream.

It's worth remembering that Stable Video Diffusion is part of a larger wave of innovation in video creation. Alongside other emerging models such as Google's VideoPoet, it is less a standalone tool than a catalyst for new ideas. The future of video creation is brimming with potential, and Stable Video Diffusion is helping to pave the way toward a new era of video imagination and production.